The road to Safer: Equipping industry to end CSAM

July 22, 2020


From TED to Today

“Determined and nervous. In equal parts.”

This is how Thorn CEO Julie Cordua describes feeling as she stood in the wings of the TED stage on April 16, 2019. Thorn was being acknowledged as one of the 2019 TED Audacious grant winners, and Julie’s TED Talk would announce a bold new goal: eliminate child sexual abuse material (CSAM, also known as child pornography) from the open web.

In doing so, Julie would share the knowledge Thorn had accumulated over nearly a decade of building technology to defend children from online sexual abuse, and shine a light on a topic many turn away from. And she would unveil a groundbreaking product called Safer, still in beta at the time, that would allow tech companies of all sizes to find the toxic content plaguing their platforms and remove it at scale.

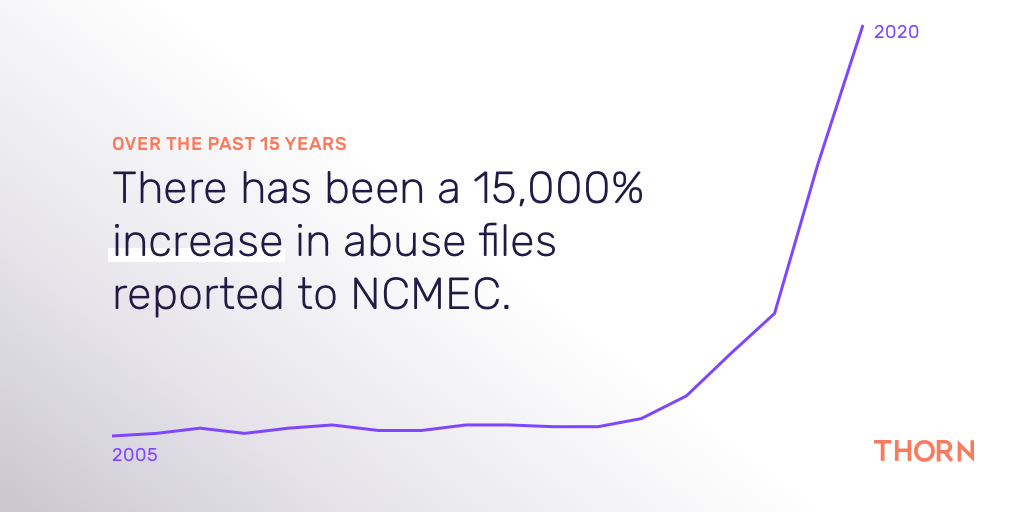

The widely reported New York Times investigative series last year exposed what Thorn had been working on for years: the spread of images and videos documenting the sexual abuse of children had reached epidemic proportions. Reported files of abuse content on the open web have exploded by over 15,000% in the past 15 years, tripling between 2017 and 2019 alone. Abusers are adept at exploiting emerging technology to build communities around the harm of children, and disrupting the growth of those communities requires a coordinated, technology-led response.

Today, Thorn is joined by Safer customers including Flickr, Imgur, Slack, VSCO, Vimeo and others as we announce the public launch of our first commercially available product.

Built by Thorn, Safer allows tech platforms to identify, remove, and report child sexual abuse material at scale, a critical step forward in the plan, shared at TED, to eliminate CSAM. Safer has already enabled the takedown of nearly 100,000 known CSAM files while in its beta phase, and we’re just getting started.

Equipping the Front Lines

To understand why equipping the tech industry is so critical to ending online child abuse, it helps to understand the full CSAM-removal pipeline.

Today, the vast majority of abuse material reported to The National Center for Missing and Exploited Children (NCMEC), the clearinghouse for CSAM in the United States, comes from the same websites that many of us use every day. With content-hosting platforms processing nearly 2 billion user uploads daily, the first challenge is identifying suspected abuse material in a way that is quick, comprehensive, and safe for both the employees and the larger community of users.

CSAM is so illegal we talk about it as being radioactive. In the United States, only NCMEC and law enforcement are authorized to maintain known CSAM files, in the service of finding the children depicted, investigating cases and prosecuting offenders.

Companies are not legally required to look for CSAM, but once they are made aware of it, they must report it to NCMEC. Companies have two ways to become aware of content like CSAM that violates their terms and conditions: either a user reports a file (in which case that user, and possibly many others before them, has been unintentionally exposed to traumatic content), or the company proactively moderates the content being uploaded to its platform in real time.

When a company makes a report to NCMEC, the company must maintain a record of the report for 90 days while NCMEC analysts review the reports and each file, and determine which should be sent along to law enforcement. These reports support investigations and prosecutions across the country and are crucial sources of information.

The biggest tech players have navigated this complex but critical CSAM-removal process by building proprietary solutions. Yet outside of that handful of companies, most of the tech industry lacks the resources or the expertise to comprehensively address the issue. In 2019, only 12 companies accounted for almost 99% of all reports to NCMEC.

Even beyond identification and reporting challenges, the sharing of information on this toxic content is extremely limited. This means each image or video must be individually discovered and removed as it surfaces on platforms around the world — a global game of whack-a-mole. Extrapolated to the millions of abuse files in circulation, it is easy to see how these gaps in the system have allowed bad actors to operate undetected for far too long.

Forming a Vision

In his years building child safety tools at Facebook and Google, Thorn Product Director Travis Bright saw firsthand the inefficiencies in having companies across the sector build a similar system over and over. When Travis left Silicon Valley for a head-clearing sabbatical, he had little intention of returning. So little, in fact, that he had sold most of his belongings and was hiking the Appalachian Trail when he received an unexpected call from Julie at Thorn.

“The opportunity to come to Thorn to build an anti-CSAM tool once that could be available across the industry was what brought me back,” said Travis. “It’s amazing to see so many companies now stepping up to proactively eliminate CSAM.”

As Thorn built out a dedicated team of technical and issue experts, the idea began to crystallize. One tool enabling companies across the sector to proactively match uploaded content against known hashes (the numeric “fingerprints” used to represent CSAM that has already been identified and reported) would allow for the rapid takedown of repeat images. Meanwhile, hash sharing across the ecosystem would allow known CSAM to be removed at scale, so that when an unknown piece of content surfaced – often indicative of a child in an active abuse situation – a virtual alarm bell would go off on the desks of child protection agents everywhere.
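To make the idea of matching against known hashes concrete, here is a minimal sketch in Python. It is an illustration only, not Safer's implementation: the `KnownHashList` class and its methods are hypothetical names, and SHA-256 stands in for whatever fingerprinting a real system uses. An exact cryptographic hash like this only catches byte-identical copies, so production systems typically pair it with perceptual hashing to catch altered files.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return a hex digest that serves as the file's fingerprint.

    Assumption: SHA-256 is used purely for illustration.
    """
    return hashlib.sha256(data).hexdigest()


class KnownHashList:
    """Hypothetical store of fingerprints for already-reported files."""

    def __init__(self, hashes=()):
        self._hashes = set(hashes)

    def add(self, file_hash: str) -> None:
        """Record the fingerprint of a newly reported file."""
        self._hashes.add(file_hash)

    def merge(self, other: "KnownHashList") -> None:
        """Hash sharing: absorb fingerprints contributed by another platform."""
        self._hashes |= other._hashes

    def matches(self, data: bytes) -> bool:
        """Check an upload against the list without storing the file itself."""
        return fingerprint(data) in self._hashes
```

Because only fingerprints are stored and exchanged, platforms can share what they have found without ever passing the underlying files to one another. A file reported once on one platform can then be matched on every platform that merges the shared list.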

The tide-turning moment would come when the majority of platforms were proactively looking at the point of upload for known and unknown CSAM on their platforms, and sharing hashes so that when the documentation of a child’s abuse was found once, it could be eliminated everywhere.

#SaferTogether

Today our product serving the tech industry, Safer, includes key features to ensure the vision to end CSAM can be realized. Image and video hashing against a growing database of hashes can identify known CSAM immediately. When Safer’s first beta partner, Imgur, turned the product on, known abuse content was flagged within 20 minutes, which led to the takedown of over 231 files from that same account.

Expanding beyond what we currently know, Safer leverages a unique machine learning classification model that targets unknown (not previously reported) files that are suspected to be CSAM. In addition to being reported to NCMEC, the hashes of those newly found files can be added to SaferList, a service for Safer customers that facilitates information-sharing across the industry and strengthens the entire ecosystem with every new file found.
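The two detection paths described above, hash matching for known files and classification for unknown ones, can be sketched as a simple triage step. This is a hedged illustration, not Safer's API: the function names, the returned labels, the `score` callable standing in for a classifier, and the 0.8 threshold are all assumptions made for the example.

```python
import hashlib
from typing import Callable, Set


def triage(
    data: bytes,
    known_hashes: Set[str],
    score: Callable[[bytes], float],
    threshold: float = 0.8,  # assumed cutoff, chosen only for illustration
) -> str:
    """Route an upload to one of three hypothetical outcomes."""
    digest = hashlib.sha256(data).hexdigest()
    if digest in known_hashes:
        # Already identified and reported elsewhere: no classifier needed.
        return "known_match"
    if score(data) >= threshold:
        # Not previously reported but suspected: send to human review.
        return "needs_review"
    return "no_action"


def add_to_shared_list(data: bytes, shared_hashes: Set[str]) -> None:
    """After review and reporting, contribute the hash so others can match it."""
    shared_hashes.add(hashlib.sha256(data).hexdigest())
```

Once a reviewed file's hash is added to the shared set, the same file is caught by the fast hash-matching path the next time it appears, on any platform using the list.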

Moderation tools developed with both efficiency and wellness in mind allow teams to review content faster and with less redundancy, minimizing exposure to harmful content. All the child abuse content Safer finds can be reviewed and reported within a single interface, which prioritizes getting critical information into the hands of frontline responders so they can identify children faster and get to them sooner.

Today Just Got Safer

Time spent online has been steadily increasing over the last decade, but the past six months have certainly redefined how much of our communal living, learning, working and connecting happens on the internet. Most of us are deeply invested in protecting our homes and workplaces, our children and communities. So why would we accept anything less than a safe online environment in which to exist?

The widespread presence of documented child sexual abuse on the internet we all share is an unacceptable travesty. But today, our online world just got a little Safer. Thorn is committed to building the internet we, and our children, deserve. We invite you to join us.
